Mainframe modernization is like a balloon payment on your 7-year ARM mortgage that has come due, or an unhedged call option that has been exercised (see [Bad code isn't Technical Debt, it's an unhedged Call Option](https://www.higherorderlogic.com/2010/07/23/bad-code-isnt-technical-debt-its-an-unhedged-call-option/)). All code is technical debt, and it is critical to understand the risk profile of your debt as you embark on a mainframe modernization project (see [Risk Profile of Technical Debt](https://tanzu.vmware.com/content/intersect/risk-profile-of-technical-debt)). Also see derivatives of technical debt for a detailed treatment of this topic.

Sticking with the financial analogy: your payment is due, your option has been exercised, and you owe a large sum. Our natural instinct is to find an easy way out, perhaps by taking a payday loan or swapping one kind of debt for another. Unfortunately, none of these options work in the long term. The sustainable way out is a debt restructuring program in which we retire the debt gradually over time or, in extreme cases, declare bankruptcy. So what does all of this have to do with mainframe modernization?

There are many enticing options when it comes to mainframe modernization: offloading work to cheaper processors on the mainframe, getting volume discounts from your mainframe provider, slapping REST APIs on top of the mainframe systems, running COBOL-to-Java code generators, or outsourcing the refactoring and rewrite of the code (the debt) outside the company. These efforts are generally well intentioned and start well, but they quickly get stuck in the mud because they do not scale or because the resulting solution is too complex to sustain.

At VMware Pivotal Labs we acknowledge that mainframe modernization is hard. The program gets worse before it gets better, because concurrent development work streams have to be maintained for both the legacy system and the net new one. Having helped multiple customers on this journey, we have come up with an iterative, phased approach to mainframe modernization that scales and yields ROI in days and weeks, not months and years.

1. Start with the end in mind. What is the critical business event or situation that has triggered the modernization? It is very important to understand why the modernization program is being funded so that we can create the right set of goals, objectives and key results. Are you doing this because you cannot add features fast enough to the critical system running on the mainframe? Are you doing this because you need a new digital 360-degree experience for your customers? What are the key business drivers for the modernization? This alignment needs to be driven by both business and technology executives and reinforced by all the product owners, product managers and tech leads. **The outcome of this phase is a clearly articulated set of goals and objectives with quantified key results that provide the journey markers to understand if the program is on track.**

2. After goal alignment it is time to take an inventory of the business processes of the critical systems running on the mainframe. The business domain has to be analyzed and broken down into discrete, independent business capabilities that can be modeled as microservices. This process of analyzing the core business domain and modeling its constituent parts is called Event Storming. Event Storming enables decomposing massive monolithic systems into smaller, more granular, independent software modules, aka microservices. It allows for modeling new flows and ideas, synthesizing knowledge, and facilitating active group participation without conflict in order to ideate the next generation of a software system. Event Storming, a collaborative group modeling exercise, is used to understand the top constraints, conflicts and inefficiencies of the system and to reveal the underlying bounded contexts. The seams of the current system tell us how the new distributed system should be designed. We also weave in aspects of XP here, like UCD, Design Thinking and interviews, to keep our understanding of the system real. **The outcome of this phase is a set of service candidates, also called bounded contexts, that represent the business capabilities of the core, supporting and generic business domains.**

3. A critical system on the mainframe, like commercial loan processing or pharmacy management, has multiple end-to-end workflows. It is critical to understand all the key business flows across the event storm, because they provide a steel thread for modernization. We need to prioritize these key flows as they will drive out the system design. The first thin slice picked should be a happy-path flow that provides end-to-end value, demonstrates incremental progress and redoubles faith in the whole process. **The outcome of this phase is a set of prioritized thin slices that encompass the Event Storm.**

4. We have all the Lego pieces; now it's a matter of putting them together with flows of messaging, data, APIs and UIs so that we fulfill the needs of the business. We now have to wire up all our domain services. For this phase we use a process called Boris, invented at Pivotal (see [SWIFT](https://www.swiftbird.us/)). Boris provides a structured way to design synchronous API-driven and asynchronous event-driven interactions between service contexts. We identify relationships between services to reveal the notional target system architecture and record them using SNAP. SNAP takes the understanding from the Boris diagram and captures the specific needs of every bounded context under the proposed architecture. The SNAP exercise is done concurrently with the Boris exercise. We call out the APIs, data needed, UIs and risks that apply to each bounded context as the thin slice is modeled across services. **The outcome of this phase is a Notional Target Architecture of your new system with external interaction modes mapped out in terms of messaging, data and APIs.**

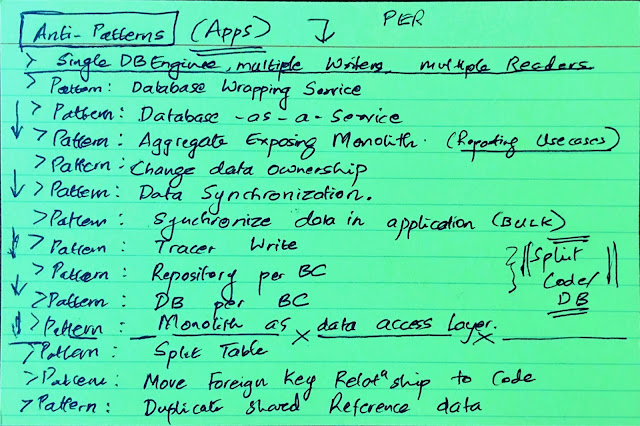
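
To make the Boris and SNAP outputs from step 4 more concrete, here is a minimal sketch of how one thin slice could be recorded as data. It is not a tool that SWIFT prescribes. The class names (`Interaction`, `SnapCard`), the `build_snap_cards` helper, and the loan-processing contexts, routes and event names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    SYNC_API = "sync-api"        # request/response call between contexts
    ASYNC_EVENT = "async-event"  # event published by one context, consumed by another


@dataclass(frozen=True)
class Interaction:
    """One arrow on a Boris-style diagram (hypothetical representation)."""
    source: str
    target: str
    mode: Mode
    label: str  # API route or event name


@dataclass
class SnapCard:
    """Per-context notes gathered while a thin slice is walked across services."""
    context: str
    apis: set = field(default_factory=set)
    events_published: set = field(default_factory=set)
    events_consumed: set = field(default_factory=set)
    data: set = field(default_factory=set)
    uis: set = field(default_factory=set)
    risks: set = field(default_factory=set)


def build_snap_cards(interactions):
    """Derive one card per bounded context from the interactions of a thin slice."""
    cards = {}
    for hop in interactions:
        source = cards.setdefault(hop.source, SnapCard(hop.source))
        target = cards.setdefault(hop.target, SnapCard(hop.target))
        if hop.mode is Mode.SYNC_API:
            # The called context is the one that has to expose the API.
            target.apis.add(hop.label)
        else:
            source.events_published.add(hop.label)
            target.events_consumed.add(hop.label)
    return cards


# Hypothetical happy-path slice for a loan application.
thin_slice = [
    Interaction("Loan Application", "Credit Check", Mode.SYNC_API, "POST /credit-checks"),
    Interaction("Loan Application", "Underwriting", Mode.ASYNC_EVENT, "LoanApplicationSubmitted"),
    Interaction("Underwriting", "Notification", Mode.ASYNC_EVENT, "LoanApproved"),
]

cards = build_snap_cards(thin_slice)
# Data, UIs and risks come out of the workshop conversation, so they are added by hand.
cards["Credit Check"].risks.add("latency of the legacy credit bureau call")

for card in cards.values():
    print(card)
```

Keeping the diagram as data like this is only one option; its appeal is that each additional thin slice can be appended to the list and the per-context cards regenerated, which fits the iterative way the exercises are run.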

5. In some ways this process is like bringing a plane at 30,000 feet down to the ground. We are in full descent now, at the 10,000-foot level. At this point we have a target architecture. It is now critical to understand how we will develop the old and new systems side by side without disruption, i.e. how to change the engine while the plane is descending. We employ a set of key tactical patterns for modernization, like Anti-Corruption Layer, Facade and data-driven Strangler, to carve out a set of MVPs and the user stories mapped to each MVP. These stories will realize the SNAP built earlier and implement the thin slices that were modeled. Creating a road map of all the work is critical as we start, to make sure we are going in the right direction with speed. **The outcome of this phase is a set of user stories for modernization implementing the tactical patterns of co-existence with the monolith and a set of MVPs to track the key results.**

6. We now have a backlog of user stories and we are less than 1,000 feet from the ground. At this point it is important to identify the biggest spikes and risks to the technical implementation, like latency, performance, security and tenancy, and resolve them. We start building out contracts for our APIs so that other teams and dependencies can be unblocked. The stories are organized into epics at Inception, product managers and engineers are allocated, and the first iteration begins. The feedback loop between product, engineering and business is set in motion. **This phase encompasses the first sprint or iteration of development. It is critical to establish demos, Iteration Planning Meetings, retrospectives and feedback loops in this phase, as this will set the tone for the rest of the project.**
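
The co-existence patterns named in step 5 can be illustrated with a small sketch. It combines an Anti-Corruption Layer with a facade that routes on data, which is one plausible reading of a data-driven strangler. Everything in it is an assumption made for illustration: the record layout (`CUST-ID`, `LN-AMT`, `RC`, `LN-ID`), the approval limit, the class names and the in-memory stand-in for the mainframe are hypothetical, not a real mainframe interface.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class LoanApplication:
    """Domain model used by the new services (hypothetical)."""
    customer_id: str
    amount: Decimal


@dataclass(frozen=True)
class LoanDecision:
    loan_id: str
    accepted: bool
    handled_by: str


class FakeMainframeGateway:
    """In-memory stand-in for a mainframe transaction (hypothetical record layout)."""

    def submit(self, record: dict) -> dict:
        # Assumed legacy convention: amount in cents, zero-padded to 9 digits.
        accepted = int(record["LN-AMT"]) <= 5_000_000
        return {"RC": "00" if accepted else "08", "LN-ID": "MF-" + record["CUST-ID"]}


class LegacyLoanAdapter:
    """Anti-Corruption Layer: legacy field names and return codes stop here."""

    def __init__(self, gateway):
        self._gateway = gateway

    def submit(self, application: LoanApplication) -> LoanDecision:
        cents = int(application.amount * 100)
        record = {"CUST-ID": application.customer_id, "LN-AMT": f"{cents:09d}"}
        reply = self._gateway.submit(record)
        return LoanDecision(reply["LN-ID"], reply["RC"] == "00", "mainframe")


class NewLoanService:
    """New implementation of the same thin slice (hypothetical)."""

    def submit(self, application: LoanApplication) -> LoanDecision:
        accepted = application.amount <= Decimal("50000")
        return LoanDecision("NEW-" + application.customer_id, accepted, "new-service")


class StranglerFacade:
    """Single entry point: routes each request to old or new based on data."""

    def __init__(self, legacy, modern, migrated_customers):
        self._legacy = legacy
        self._modern = modern
        self._migrated = set(migrated_customers)

    def submit(self, application: LoanApplication) -> LoanDecision:
        if application.customer_id in self._migrated:
            return self._modern.submit(application)
        return self._legacy.submit(application)


facade = StranglerFacade(
    legacy=LegacyLoanAdapter(FakeMainframeGateway()),
    modern=NewLoanService(),
    migrated_customers={"C2"},  # grows as more customers are moved off the monolith
)

old_path = facade.submit(LoanApplication("C1", Decimal("1250.00")))
new_path = facade.submit(LoanApplication("C2", Decimal("1250.00")))
print(old_path)
print(new_path)
```

Callers only ever see `LoanApplication` and `LoanDecision`, so widening the migrated set shifts traffic to the new service without changing any client code, and the adapter can be deleted once the set covers everyone.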

The six steps of mainframe modernization outlined here are not implemented like a waterfall. For a large, complex domain the six steps are sometimes run multiple times, once for each area, and the results are stitched together. Steps or phases may be skipped altogether if we already know parts of the domain well. This six-step process is what we call SWIFT. It is not dogmatic. Do what works at velocity to modernize the system in increments with a target architecture map in hand. Mainframe modernization is hard and there is no easy way out. Internalize this and start the journey of a thousand miles swiftly with the first step.